Server Side Caching not working as intended #1779

Reference: vikunja/vikunja#1779

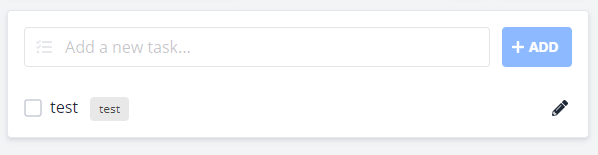

I found that when you have in-memory caching enabled on the server, task labels become duplicated. When you create a label, navigate away, and then come back, the labels are duplicated. Please see these 2 images for instance:

Here is my config.yml:

The first response from the server on the list page (before duplication) looks like the following code snippet. This is the request that shows a valid list/label group.

On the second request (after duplication) this is what we receive:

Disabling cache in the config.yml resolves the issue completely, even after multiple page changes/reloads (as expected):

It appears that the backend caching on the server side is keeping multiple records of the same data, appending them instead of updating or replacing the existing entry. I am not sure if this is a misconfiguration on my part. I am running the backend and frontend using docker, with a reverse proxy in front of both and the proper nginx rules set up.
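To illustrate the append-versus-replace behavior suspected above, here is a minimal Go sketch. It is not xorm's or Vikunja's actual caching code; the `Label` type, the `appendCache` and `replaceCache` types, and the cache key are all hypothetical names made up for this example. It only shows how storing the same result twice produces duplicates when a cache appends, and does not when it overwrites.

```go
package main

import "fmt"

// Label is a hypothetical stand-in for a task label (not Vikunja's model).
type Label struct {
	ID    int64
	Title string
}

// appendCache mimics the suspected faulty behavior: every Store call
// appends to whatever is already cached under the key.
type appendCache map[string][]Label

func (c appendCache) Store(key string, labels []Label) {
	c[key] = append(c[key], labels...)
}

// replaceCache shows the expected behavior: every Store call overwrites
// the cached entry with a fresh copy of the labels.
type replaceCache map[string][]Label

func (c replaceCache) Store(key string, labels []Label) {
	c[key] = append([]Label(nil), labels...)
}

func main() {
	labels := []Label{{ID: 1, Title: "bug"}}
	key := "task:1:labels" // hypothetical cache key

	bad := appendCache{}
	good := replaceCache{}

	// Simulate two page loads that each store the same query result.
	for i := 0; i < 2; i++ {
		bad.Store(key, labels)
		good.Store(key, labels)
	}

	fmt.Println("append cache entries:", len(bad[key]))
	fmt.Println("replace cache entries:", len(good[key]))
}
```

If the real cache behaves like `appendCache`, every revisit of the list page would add another copy of each label, which would match the symptom described here.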

Title changed from "Service Worker Caching & Config Caching Enabled" to "Server Side Caching not working as intended".

The caching is provided by the orm layer directly, so maybe that actually is a bug in there somewhere.

Could you try redis to see if it makes any difference?

In general, I found the caching nice to have, but the difference in speed is usually not noticeable at all. I'm running my instance and the demo one without it enabled and am still seeing < 10ms response times for most things.

I agree caching for this type of content on the orm layer probably won't reduce the load times much, especially since the assets are being cached by the service worker. Either way, it is offered for in-memory and not working as intended, so I wanted to create a ticket.

At the end of the day caching won't really be required for me, even though it would be nice. I will give redis a try and get back to you.

Looks like redis may have its own issues going on:

Could be my implementation, but I do not think so. There is no authentication for this redis server, and it is a local docker container as well. Any ideas on that?

To make it a bit easier to read, this is the only error in that dump:

AFAIU even though this is an error it should still cache things. I've asked the xorm guys a while back about this but they didn't have a good idea either. Not quite sure where to look.

Does the issue you originally reported still happen?

In the (far away) future I'd like to get rid of the orm caching and cache stuff in Vikunja directly, but there's a lot of other stuff that is more important than this, so that won't happen any time in the next months.

This is a fresh install of redis, nothing else using the service.

Some more details from redis side of things:

keys *

and

The issue is still present with in-memory caching and it appears that redis caching does not work, at least not in a dockerized setup like this. Right now disabling caching is the best route.

I agree caching on the vikunja side would be optimal, but at the end of the day of course there are more important tasks. I suppose this will be one of those issues that remains open for a while lol

Yeah I guess this will be open for a while (that is, unless you want to dive in and send a PR 🙂 )

In a best case scenario this would get fixed by xorm without any doings on our side.

If I get time to look into redis/the memory caching I will submit a PR, if I get anywhere. Between work and this masters program, development time has been severely reduced :(

That would be nice. I find it rarely happens though lol

Well at least you were able to reproduce it, that's something.

Closing this due to inactivity - please feel free to reopen if this is still relevant and reproducible with the latest version (on try for example).